- It serves as the foundation of algorithm analysis, categorized into two three type of analysis.

- These notations are mathematical tool used to analyze the performance of algorithms by understanding how their efficiency changes as the input size grows.

- These notations provide a concise way to express the behavior of an algorithm’s time or space complexity as the input size approaches infinity.

- Rather than comparing algorithm directly, asymptotic analysis focuses on understanding the relative growth rates of algorithms complexities.

- According to the input size increases these notations are used to analyze the performance or time and space complexity

There are many asymptotic notations:

1)Big-O Notation (O-notations) (worst case time complexity)

2)Big-O (Upper Bound)

3)Omega Notation(Ω-notations) (Best case time complexity)

4)Big Omega (Lower Bound)

5)Theta Notation(Θ-notations) (average case time complexity)

6)Big Theta (Tight Bound)

7)Small-O (Strictly Less Than)

8)Small-Omega (Strictly Greater Than)

But mainly there are three notations :-

- Big-O Notation (O-notations) (worst case time complexity)

- Omega Notation(Ω-notations) (Best case time complexity)

- Theta Notation(Θ-notations) (average case time complexity)

1)Big-O notations: –

- Big-O shows the upper limit of an algorithm’s running time.

- · It tells us the slowest possible growth rate.

- · It’s used to measure worst-case performance.

- · Written as O(f(n)), where f(n) is a function of input size n.

- · It helps in comparing two algorithms easily.

- · Big-O ignores small terms and constants.

- · Example: O(n²) grows faster than O(n).

- · If an algorithm is O(n²), it means time increases quadratically with n.

- · O(1) → Constant time (fastest).

- · O(log n) → Logarithmic time (e.g., Binary Search).

- · O(n) → Linear time (e.g., Linear Search).

- · O(n log n) → Linearithmic time (e.g., Merge Sort).

- · O(n²) → Quadratic time (e.g., Bubble Sort).

- · O(2ⁿ) → Exponential time (e.g., Recursive Fibonacci).

- · Big-O helps predict performance for large inputs.

- · It defines the upper bound—the algorithm will not exceed it.

- · It’s often shown in worst-case analysis tables.

- · It’s one of the most important notations in computer science.

- · It ensures developers avoid inefficient algorithms.

- · Example summary: If a code is O(n), it means doubling n doubles the time.

2)Big-O (Upper Bound) — Detailed View

- Big-O represents the maximum possible time an algorithm can take.

- It’s the ceiling of performance growth.

- The algorithm never exceeds this growth rate.

- It’s used to guarantee performance limits.

- Example:

O(n²)→ At most proportional to n². - Even if sometimes it’s faster, it won’t be slower than O(n²).

- It’s a safe estimate for the worst performance.

- It is used in algorithm analysis and proofs.

- The function f(n) defines the upper curve of growth.

- For small n, constants may matter; for large n, they don’t.

- Big-O helps compare scalability of algorithms.

- It’s usually shown in performance charts and graphs.

- Example: In sorting algorithms, Bubble Sort = O(n²).

- Merge Sort = O(n log n), so it’s faster for big inputs.

- It shows how time increases as input size grows.

- The Big-O curve is always above or equal to actual time.

- It’s useful when predicting worst performance in systems.

- It does not depend on hardware—only logic.

- It is the most commonly used asymptotic notation.

- In simple words: Big-O = “The algorithm takes at most this much time.”

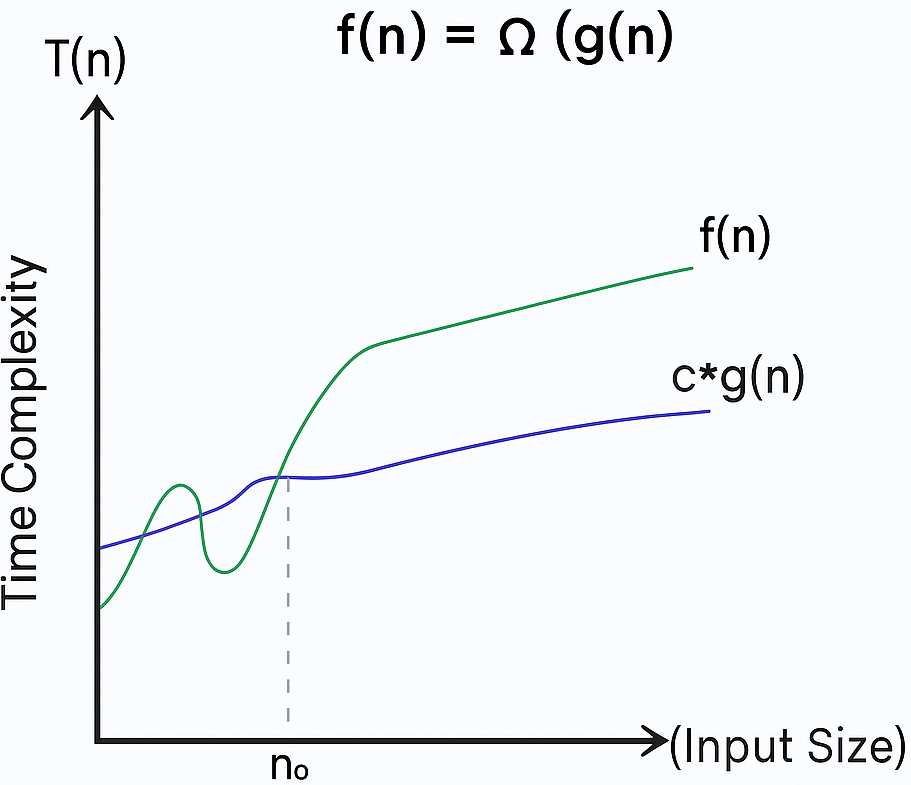

3)Omega Notation (Ω-Notation) — Best Case Time Complexity.

- In short: Ω = “The algorithm takes at least this much time.”

- Omega (Ω) notation batata hai best-case performance of an algorithm.

- It defines the minimum time an algorithm will take.

- It’s written as Ω(f(n)), where f(n) is a function of input size n.

- It shows the lower limit of an algorithm’s growth.

- Example: If an algorithm is Ω(n), it means it takes at least linear time.

- It helps in analyzing optimistic performance.

- Ω(1) → constant time in the best case.

- Ω(n) → minimum linear time needed.

- Ω(log n) → logarithmic time for best case (like Binary Search).

- It is the opposite of Big-O, which shows worst case.

- It helps understand how fast an algorithm can possibly be.

- If an algorithm is Ω(n), it can’t be faster than linear growth.

- Used when we want to estimate minimum performance guarantee.

- It gives an ideal-case performance boundary.

- For example: In Linear Search, Ω(1) (found at first element).

- In Bubble Sort, Ω(n) (best case when already sorted).

- It helps programmers optimize for average/best-case.

- It ensures we know the fastest achievable performance.

- It’s useful in complexity proofs and analysis.

4)Big-Omega(Lower Bound) — Detailed Explanation.

- In short: Big Omega = “Algorithm will take no less than this much time.”

- Big Omega defines the lowest boundary of an algorithm’s growth.

- It’s the best possible case for time complexity.

- The algorithm won’t perform better than this bound.

- Example:

Ω(n)→ Time will be at least linear. - It helps in knowing the minimum effort required.

- It’s useful when comparing best performance limits.

- In sorting algorithms: Bubble Sort → Ω(n), Merge Sort → Ω(n log n).

- It means Merge Sort will take at least n log n even in best cases.

- It represents the lower edge of the complexity graph.

- The curve of Ω lies below or touches the actual performance.

- It ensures algorithm designers know the baseline speed.

- It’s important for benchmark analysis.

- Ω is used in asymptotic analysis just like Big-O.

- The real running time of any algorithm lies between Ω and O.

- If both Ω and O are the same → algorithm has a tight bound.

- It shows the minimum limit of time complexity.

- It’s often combined with Θ (Theta) for balanced view.

- Omega gives a positive side of algorithm performance.

- It’s written as

T(n) ≥ c * f(n)for large n (where c is constant).

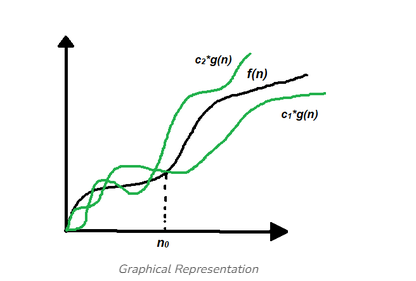

5)Theta Notation (Θ-Notation) -Average Case Time Complexity.

- In short: Θ = “Algorithm usually takes this much time.

- Theta (Θ) notation shows the average-case performance of an algorithm.

- It gives a tight bound on time complexity — both lower and upper limits.

- It means the algorithm will take approximately this much time in most cases.

- It’s written as Θ(f(n)), where f(n) is a function of input size.

- Example: If an algorithm takes Θ(n²), it means it grows roughly proportional to n².

- Θ is a combination of Big O and Big Omega.

- In other words, Θ = between the best and worst case.

- Formula: c₁·f(n) ≤ T(n) ≤ c₂·f(n) for some constants c₁, c₂.

- It represents balanced performance analysis.

- Θ(n) → linear average performance.

- Θ(log n) → logarithmic average performance.

- Θ(n log n) → efficient algorithms like Merge Sort.

- Used to show expected behavior of algorithm in normal conditions.

- If an algorithm’s best, worst, and average cases are same → use Θ.

- Example: Binary Search = Θ(log n).

- Example: Bubble Sort = Θ(n²) average case.

- It’s very useful in practical performance prediction.

- Θ helps developers know what happens most of the time.

- It’s the most realistic measure for normal input conditions.

6)Big Theta (Tight Bound) – Balanced Growth Rate

- Big Theta shows the tight bound of algorithm’s growth.

- It defines both upper and lower limits accurately.

- It tells that the algorithm’s performance is neither faster nor slower than f(n).

- Example: Θ(n²) means it grows exactly like n².

- The function f(n) is sandwiched between two constants (lower & upper).

- It’s written mathematically as:

→ ∃ constants c₁, c₂ > 0, and n₀ > 0, such that

c₁·f(n) ≤ T(n) ≤ c₂·f(n) for all n > n₀. - It gives the most accurate complexity of an algorithm.

- If Big O = Big Omega = Θ → algorithm has a perfect bound.

- It helps to describe exact rate of growth.

- Example: Merge Sort = Θ(n log n).

- Binary Search = Θ(log n).

- Linear Search = Θ(n).

- Bubble Sort = Θ(n²).

- It is the most preferred notation in asymptotic analysis.

- It shows that algorithm grows proportionally with input size.

- It’s used when performance variation is minimal.

- It represents stable and predictable algorithms.

- Θ notations help in runtime estimation accurately.

- It means the growth rate will always stay near f(n).

- In short: Big Theta = “Algorithm grows exactly at this rate.”

7)Small-o notation is written as o(f(n)).

- It represents an upper bound that is not tight.

- It means the algorithm grows slower than f(n).

- Example: If T(n) = o(n²), it means T(n) grows slower than n².

- Small-o excludes equality — only strictly less than.

- It’s like saying: “T(n) grows less quickly than f(n).”

- Used when the algorithm is more efficient than f(n).

- Formally: For every constant c > 0, there exists n₀ such that

- T(n) < c·f(n) for all n > n₀.

- It’s a loose upper limit — not exact.

- Big-O allows equality, but small-o does not.

- Example: log n = o(n)

- Example: n = o(n²)

- Example: n² ≠ o(n²) (because it’s equal, not strictly less)

- Used in mathematical proofs of algorithm growth.

- It indicates better performance than some function.

- It’s rarely used in basic algorithm analysis — mostly theoretical.

- o(f(n)) means “eventually smaller than f(n)” as n → ∞.

- It helps to express tighter comparisons between growth rates.

- It’s useful when analyzing limit-based performance.

- In short: Small-o = “grows slower than f(n), but not equal.”

In short: Small-o = “grows slower than f(n), but not equal.”

8)Small-Omega Notation (Strictly Greater Than – Lower Bound That’s Not Tight)

- In short: Small-omega = “grows faster than f(n), but not equal.”

- Small-omega notation is written as ω(f(n)).

- It represents a lower bound that is not tight.

- It means the algorithm grows faster than f(n).

- Example: T(n) = ω(n) means T(n) grows faster than n.

- It’s the opposite of small-o.

- It excludes equality — only strictly greater than.

- Used when the algorithm is less efficient than f(n).

- Formally: For every constant c > 0, there exists n₀ such that

- T(n) > c·f(n) for all n > n₀.

- It’s a loose lower limit — not exact.

- Big-Omega allows equality, but small-omega does not.

- Example: n² = ω(n) ✅

- Example: n log n = ω(n) ✅

- Example: n² ≠ ω(n²) ❌ (equal is not allowed)

- It shows that T(n) grows significantly faster than f(n).

- Used in theoretical comparisons of algorithms.

- Helps identify algorithms that are slower than a given rate.

- ω(f(n)) means “eventually greater than f(n)” as n → ∞.

- It’s often used in asymptotic proofs in complexity theory.

- Example: n² = ω(n log n).

| Notation | Symbol | Meaning | Relation Type | Example |

|---|---|---|---|---|

| Big-O | O(f(n)) | Upper Bound | ≤ or = | T(n) ≤ c·f(n) |

| Omega | Ω(f(n)) | Lower Bound | ≥ or = | T(n) ≥ c·f(n) |

| Theta | Θ(f(n)) | Tight Bound | = | c₁·f(n) ≤ T(n) ≤ c₂·f(n) |

| Small-o | o(f(n)) | Strictly Less | < | log n = o(n) |

| Small-omega | ω(f(n)) | Strictly Greater | > | n² = ω(n) |

Summary of Growth Notations:-

| Notation | Symbol | Meaning | Represents | Use Case | Mathematical Form | Example |

|---|---|---|---|---|---|---|

| Big-O | O(f(n)) | Upper Bound | Worst Case | To show the maximum growth rate | T(n) ≤ c·f(n), ∀ n ≥ n₀ | T(n) = 2n² + 3n + 1 → O(n²) |

| Big-Omega | Ω(f(n)) | Lower Bound | Best Case | To show the minimum growth rate | T(n) ≥ c·f(n), ∀ n ≥ n₀ | T(n) = 2n² + 3n + 1 → Ω(n²) |

| Big-Theta | Θ(f(n)) | Tight Bound | Average Case | When both upper and lower bounds are same | c₁·f(n) ≤ T(n) ≤ c₂·f(n) | T(n) = 2n² + 3n + 1 → Θ(n²) |

| Small-o | o(f(n)) | Strictly Less | Loosely Faster | When T(n) grows slower than f(n) | ∀ c > 0, ∃ n₀ s.t. T(n) < c·f(n) | log n = o(n) |

| Small-Omega | ω(f(n)) | Strictly Greater | Loosely Slower | When T(n) grows faster than f(n) | ∀ c > 0, ∃ n₀ s.t. T(n) > c·f(n) | n² = ω(n) |

For Visual Understanding (in Words):-

Imagine a ladder of growth from slowest to fastest

O(1) → O(log n) → O(n) → O(n log n) → O(n²) → O(n³) → O(2ⁿ) → O(n!)

Now —

- Big-O (O): says “T(n) grows no faster than this level.”

- Big-Omega (Ω): says “T(n) grows no slower than this level.”

- Big-Theta (Θ): says “T(n) grows exactly at this level.”

- Small-o (o): says “T(n) grows strictly slower than this level.”

- Small-Omega (ω): says “T(n) grows strictly faster than this level.”

Real-World Example

Let’s take a car race :-

| Concept | Meaning in Race |

|---|---|

| Big-O (O) | The maximum speed limit the car can reach. |

| Big-Omega (Ω) | The minimum speed the car maintains. |

| Big-Theta (Θ) | The average consistent speed of the car. |

| Small-o (o) | A car that’s slower than another, but never matches it. |

| Small-Omega (ω) | A car that’s faster than another, but never equal. |

FOR recap:-

- Learning these helps you optimize your code and predict scalability.

- Asymptotic notations help compare algorithm efficiency.

- Big-O focuses on upper limit / worst case.

- Omega (Ω) focuses on lower limit / best case.

- Theta (Θ) gives exact growth (tight bound).

- Small-o means strictly smaller growth.

- Small-Omega (ω) means strictly larger growth.

- Together, they form a complete asymptotic analysis toolkit.

- These notations are used to ignore constants and lower-order terms.

- They help compare algorithms independent of hardware or environment.